Faculty Spotlight: Yi Zhang on the Benefits of Adopting Immediate Feedback Assessment Techniques

Yi Zhang, Associate in the Discipline of Industrial Engineering and Operations Research (IEOR), was awarded a Provost’s Innovative Course Design grant to redesign his course, Simulation. Dr. Zhang worked closely with the CTL to implement a new in-class auto-grading system to help monitor student progress, offer more immediate feedback, and improve student engagement in his large lecture class.

In this spotlight, Yi discusses why he chose to implement this active learning technique, how he worked with the CTL, how it enhanced the student learning experience, how he measured success, and what advice he has for faculty interested in implementing active learning methods in their classrooms.

Please describe your course and the learning goals and objectives for students in it.

Simulation is a core course for graduate students pursuing an MS in Operations Research or an MS in Industrial Engineering in the Industrial Engineering and Operations Research Department. In this course, we introduce different simulation techniques with a focus on their applications in operations research and business analytics. Students explore simulation techniques from both the theoretical and the applied perspective. Besides aiming for a good grasp of simulation theory, students also need to develop the ability to solve real-life simulation problems using the programming skills that they learn. At the end of this course, students are expected to be proficient in using Python to:

- generate random samples from different statistical distributions based on various sampling methods

- implement system simulation in various real-world settings, including hospitals, inventory, insurance, and restaurants, and provide business recommendations

- improve the efficiency of the system simulation based on important variance reduction methods

What were the main challenges/limitations of the previous iteration of your course?

Approximately 150 students enroll in this course each semester, and creating an active learning experience for a class of this size has been a challenge. During each lecture, students are given time to work on application-oriented coding questions on their laptops to help them better understand the important theories and methods of the class. Because of the class size, it was difficult for me to monitor each student’s progress and offer immediate help. Students were not receiving useful feedback while they worked through a problem, and they often waited a long time before getting help. As the instructor, I needed a way to track the progress of the class so that I could understand students’ knowledge gaps and offer help accordingly.

What is the intervention that you implemented and how does it enhance the student learning experience?

Auto-grading has been adopted in many courses for grading assignments and exams. For this project, we developed an immediate feedback system by incorporating auto-grading features into the lectures and making students the primary users of these features. For the in-class exercises, I worked with my teaching assistant, Achraf Bahamou, to program a Python script based on the rubrics we developed for each question. When working on the exercises, a student can submit their work electronically. The script then runs their response through various checkpoints to assign marks and provide individualized feedback based on the type of mistakes made at those checkpoints. Students can learn from the feedback, correct their mistakes, and submit their answers again. On the instructor side, I am able to observe the progress of the students, which lets me know where they stand and adapt my teaching to their needs during the lesson.
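To make the checkpoint idea concrete, here is a minimal sketch of a rubric-driven grader. It is not the script used in the course: the `Checkpoint` class, the `grade` function, the point values, and the sample exercise (inverse-transform sampling from an exponential distribution) are all hypothetical, chosen only to show how a submission might be run through checkpoints that award marks and return targeted feedback.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Checkpoint:
    """One rubric item (hypothetical structure, not the course's actual rubric)."""
    name: str
    points: int
    check: Callable[[Callable], bool]  # returns True if the submission passes
    hint: str                          # feedback shown when the check fails


def grade(submission: Callable, checkpoints: List[Checkpoint]) -> Tuple[int, List[str]]:
    """Run a submission through every checkpoint; return (score, feedback)."""
    score, feedback = 0, []
    for cp in checkpoints:
        try:
            passed = cp.check(submission)
        except Exception as exc:  # a crash counts as a failed checkpoint
            feedback.append(f"{cp.name}: raised {type(exc).__name__}. {cp.hint}")
            continue
        if passed:
            score += cp.points
        else:
            feedback.append(f"{cp.name}: {cp.hint}")
    return score, feedback


# Assumed exercise: map a uniform draw u in [0, 1) to an Exponential(rate) sample.
CHECKPOINTS = [
    Checkpoint("returns a float", 1,
               lambda f: isinstance(f(1.0, 0.5), float),
               "Your function should return a single float."),
    Checkpoint("non-negative output", 1,
               lambda f: all(f(1.0, u) >= 0 for u in (0.1, 0.5, 0.9)),
               "Exponential samples cannot be negative; check the sign of the log."),
    Checkpoint("uses the rate", 2,
               lambda f: abs(f(2.0, 0.5) - math.log(2) / 2) < 1e-9,
               "Divide by the rate parameter when inverting the CDF."),
]


def buggy_submission(rate: float, u: float) -> float:
    return -math.log(1 - u)          # forgets to divide by rate


def correct_submission(rate: float, u: float) -> float:
    return -math.log(1 - u) / rate


if __name__ == "__main__":
    for attempt in (buggy_submission, correct_submission):
        print(attempt.__name__, grade(attempt, CHECKPOINTS))
```

Keeping each hint next to the check that triggers it is one way to tie feedback to the type of mistake, and because grading is a pure function of the submission, a student can resubmit as often as they like.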

Students were very excited about the auto-grading features and were able to use them effectively to improve their work. In addition, since I have access to all the marked work, I can go over some of the student submissions, with the names hidden, during whole-class discussions. Students were noticeably more engaged when they saw work from their peers, and they took an active part in the learning process as I explained and corrected common mistakes.

What resources did you need to help you implement your intervention? (e.g. CTL support, software, hardware, etc.)

This project was an exhibition of great teamwork. Michael Tarnow, Learning Designer of Science and Engineering at the CTL, was very involved in the whole pipeline of the project. This project greatly benefitted from his expertise in educational technology and his valuable insights into pedagogical practices. During the proposal stage, I received valuable feedback from Jessica Rowe, the Associate Director for Instructional Technology at the CTL. During the assessment stage, I worked closely with Melissa Wright, the Associate Director of Assessment and Evaluation at the CTL, and Megan Goldring, a Ph.D. student from the Department of Psychology and a CTL Teaching Assessment Fellow. Achraf Bahamou, a Ph.D. student from the IEOR Department, played an important role in implementing the auto-grading features. He also worked closely with me on determining the rubrics of the practice questions and analyzing the assessment results.

On the software side, we first experimented with and then adopted EdStem, a digital learning platform. It included an auto-grading feature that was specifically designed to work in conjunction with Jupyter Notebooks. EdStem also allowed us to seamlessly integrate all the course content and the discussion board on one platform. The platform was unique in that almost all its tools, like the discussion board, were built to run code right in the feature itself. The platform co-founder, Scott Maxwell, was very open to experimenting with different features with us and offered great technical support, often making updates to the platform almost immediately to suit our needs.

Did you encounter any design or teaching challenges throughout the implementation?

First, there was an upfront cost of learning all the platform features and restructuring all the existing materials into Jupyter Notebook format that would also be well presented on the EdStem platform. This was a time-consuming process for the first iteration. However, the restructuring left the course content much more organized and easier to follow from the learner’s perspective.

The second challenge came from the actual implementation of our new tool. Bringing the immediate feedback system into the lectures was a learning experience for all the stakeholders. During our first experiment, we received mixed feedback from the students. Based on the student feedback we collected from the post-lesson survey, we were able to improve the design and the execution of the intervention. As we moved to the later stages of the course, students became more comfortable with the auto-grading features and came to appreciate the intervention.

How did you measure the success of this intervention?

We adopted an A/B testing approach by randomly assigning the students to two groups: a treatment group and a control group. Students in the treatment group received the intervention, while students in the control group did not. To be fair to the students, we flipped who was in the treatment and control groups in the following lesson. In total, this experiment was conducted in two sets of two lessons (four lessons total) at different stages of the course. After each experiment, we used subjective and objective measurements to evaluate the success of the intervention.

- Subjective measurement: After each exercise, students filled in a short survey hosted on Qualtrics. The survey collected their opinions about the problems and asked them to reflect on their experience with the auto-grading features and on the impact of the intervention on their learning (how confident they felt about the subject matter). Collecting the subjective feedback allowed us to see how the students reacted to the intervention and helped us improve our work from the learner perspective.

- Objective measurement: We compared student scores from the developed questions to see whether there was any significant difference between students in the control group and the treatment group. This testing offers us an objective measurement of the outcome of the intervention.
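The random assignment with flipped groups described above can be sketched in a few lines. This is an illustration under assumptions rather than the procedure actually used: the function name `crossover_assignment`, the fixed seed, and the student IDs are hypothetical.

```python
import random
from typing import Dict, Iterable, Tuple


def crossover_assignment(
    student_ids: Iterable[str], seed: int = 0
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Randomly split students for one lesson, then swap the groups for the next."""
    ids = list(student_ids)
    shuffled = ids[:]
    random.Random(seed).shuffle(shuffled)      # seeded so the split is reproducible
    first_treatment = set(shuffled[: len(shuffled) // 2])

    lesson_a = {s: "treatment" if s in first_treatment else "control" for s in ids}
    # Flip every label so each student gets the intervention exactly once.
    lesson_b = {s: "control" if g == "treatment" else "treatment"
                for s, g in lesson_a.items()}
    return lesson_a, lesson_b


if __name__ == "__main__":
    roster = [f"student_{i:03d}" for i in range(150)]   # placeholder IDs
    first, second = crossover_assignment(roster, seed=42)
    print(sum(g == "treatment" for g in first.values()), "students treated in lesson 1")
    print(sum(g == "treatment" for g in second.values()), "students treated in lesson 2")
```

Swapping the labels rather than drawing a fresh random split guarantees that every student experiences both conditions across the pair of lessons.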

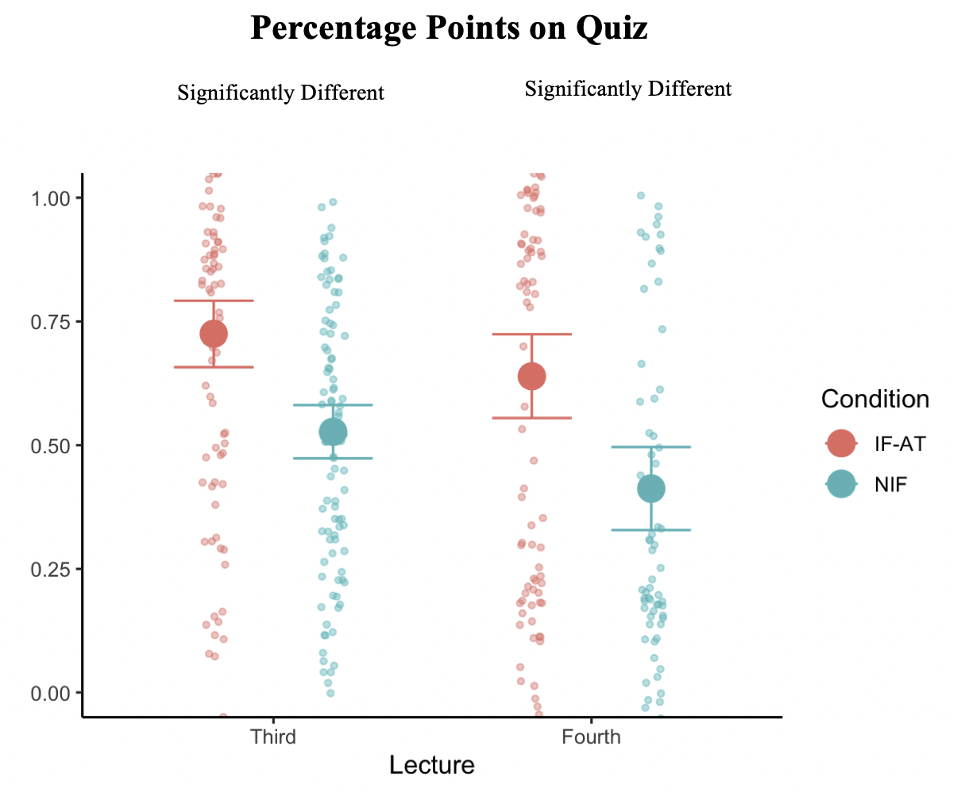

Based on our assessment, students with access to the auto-grading features were more confident in the subject matter. Across two lectures, students were assigned to either normal Python-based feedback (NIF) or the auto-grading feedback tool (IF-AT), so that half of the students were in the NIF condition in the third lecture and half of the students were in the auto-grading condition in the fourth lecture. From our objective measurement, we observed that students with access to the auto-grading features received a grade that was 30-50% higher on average than those who did not. (All differences were statistically significant, with p-values < 0.015.) See the graph above.
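The interview does not say which statistical test produced these p-values. As one possible way to compare the scores of two groups, here is a sketch of a two-sided permutation test on the difference in mean scores, using only the standard library. The function name `permutation_test` is hypothetical, and the scores in the example are made-up placeholders, not data from the course.

```python
import random
from statistics import mean
from typing import Sequence, Tuple


def permutation_test(
    treatment: Sequence[float],
    control: Sequence[float],
    n_resamples: int = 10_000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return (observed mean difference, two-sided permutation p-value)."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)

    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)                      # relabel students at random
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


if __name__ == "__main__":
    # Placeholder scores for illustration only.
    with_autograder = [8, 9, 7, 9, 8, 10, 9, 8]
    without_autograder = [5, 6, 4, 6, 5, 7, 5, 6]
    diff, p = permutation_test(with_autograder, without_autograder)
    print(f"mean difference = {diff:.2f}, p = {p:.4f}")
```

A permutation test makes no assumption about how scores are distributed, which can be convenient for small in-class exercises graded on a short scale. A t-test or a rank-based test would be a reasonable alternative.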

For which type of classes do you see faculty being able to use this intervention? Do you have any advice for them?

Faculty members who are using the flipped-class teaching method or hoping to incorporate some programming exercises in a classroom will find this intervention extremely useful. Being able to create active learning experiences for students has been a challenge for teaching and learning in large classes. Having a learning environment where students can get immediate feedback in the classroom can help the students better engage with the content and detect any misunderstandings early on. It also allows the instructors to collect valuable information from this system to help them improve their teaching.